Category: Machine Learning

Logistic Regression with Titanic - ODSC 2017

At the Open Data Science Conference in Boston, held on May 3rd, 2017, Colaberry presented an introductory workshop on Data Science with Python. The workshop covered Python and the libraries needed for data science, followed by logistic regression on the Titanic dataset. This was done with the help of our online self-learning platform, http://refactored.ai. You can still sign …

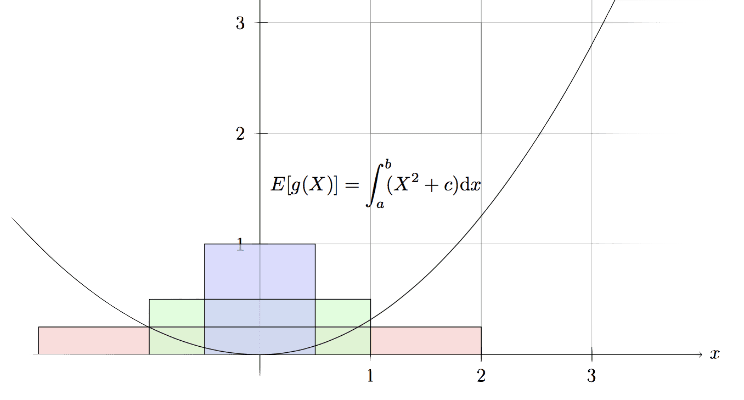

Jensen’s Inequality that Guarantees Convergence of EM Algorithm

Jensen’s Inequality that Guarantees Convergence of the EM Algorithm. Enjoy Colaberry blogs, which cover this and many other cutting-edge topics. Jensen’s Inequality states that given g, a strictly convex function, and X, a random variable, then E[g(X)] ≥ g(E[X]). Here we shall consider a scenario of a strictly convex function that maps a uniform random variable and visualize …
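A quick numerical check can make the inequality concrete. The sketch below is not taken from the post: it assumes g(x) = x² as the strictly convex function and X uniform on [0, 1), and the function name `jensen_sides` is purely illustrative.

```python
import random


def g(x):
    # Assumed strictly convex function for illustration: g(x) = x^2.
    return x * x


def jensen_sides(n_samples=100_000, seed=0):
    """Estimate E[g(X)] and g(E[X]) for X ~ Uniform[0, 1) by sampling."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n_samples)]
    mean_x = sum(xs) / n_samples
    mean_gx = sum(g(x) for x in xs) / n_samples
    return mean_gx, g(mean_x)


mean_gx, g_mean = jensen_sides()
print("E[g(X)] estimate:", mean_gx)  # analytically 1/3 for this choice
print("g(E[X]) estimate:", g_mean)   # analytically 1/4 for this choice
```

For this choice of g and X the two sides are 1/3 and 1/4 analytically, so the sampled estimates should land close to those values with E[g(X)] the larger of the two.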

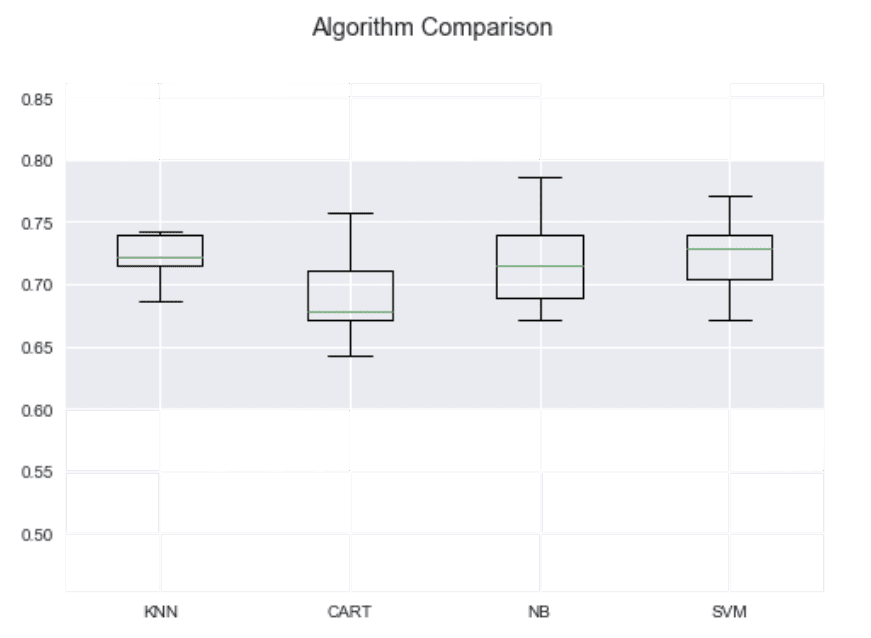

Building An Efficient Pipeline - AI Summit 2017, St. Louis

At the StampedeCon AI Summit in St. Louis, Colaberry Consulting presented how to take a complex idea in the AI domain, apply ML algorithms to it, and deploy it in production using the refactored.ai platform. More details, including code examples, are available on the platform. This, as we see, is a common area of …

Why Bayesian Formulations Are Better Than Maximum Likelihood Estimates?

Find out why Bayesian formulations are a better option than maximum likelihood estimates for data analysis. Read our blog post for valuable insights and examples. Maximum Likelihood: Maximum Likelihood Estimation (MLE) suffers from overfitting when the number of samples is small. Suppose a coin is tossed 5 times and you have to estimate the probability …
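The small-sample problem can be illustrated with a short sketch. The excerpt is truncated, so the details here are assumptions rather than the post's own example: all 5 tosses are taken to be heads, and the Bayesian estimate uses a Beta(2, 2) prior with its posterior mean. The function names are hypothetical.

```python
def mle_estimate(heads, tosses):
    # Maximum likelihood estimate of P(heads) for a Bernoulli coin.
    return heads / tosses


def posterior_mean(heads, tosses, alpha=2.0, beta=2.0):
    # Posterior mean of P(heads) under an assumed Beta(alpha, beta) prior.
    # The posterior is Beta(alpha + heads, beta + tails).
    return (heads + alpha) / (tosses + alpha + beta)


# Assumed outcome: 5 tosses, all heads.
heads, tosses = 5, 5
mle = mle_estimate(heads, tosses)
bayes = posterior_mean(heads, tosses)
print("MLE:", mle)
print("Posterior mean with Beta(2, 2) prior:", bayes)
```

Under these assumptions the MLE claims the coin always lands heads, while the posterior mean is pulled toward 0.5 by the prior and stays below 1.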
